the audio player

Every thing that heard him play,

Even the billows of the sea,

Hung their heads, and then lay by.

In sweet music is such art,

Killing care and grief of heart

Fall asleep, or hearing, die.

Henry VIII

1 the idea

Without a player, records are just dead weight.

Books, you can pick up and read. Pictures, you can look at. But recordings depend on some kind of mechanism to be heard.

The player is the thing in willshake that you can use to listen to its collection of recordings.

Right now, the player is only used for audio. But I’m just going to call it “the player” because it’s shorter than “the audio player,” and if at some point this is generalized to include video, many of the same considerations will apply.

1.1 a tour guide

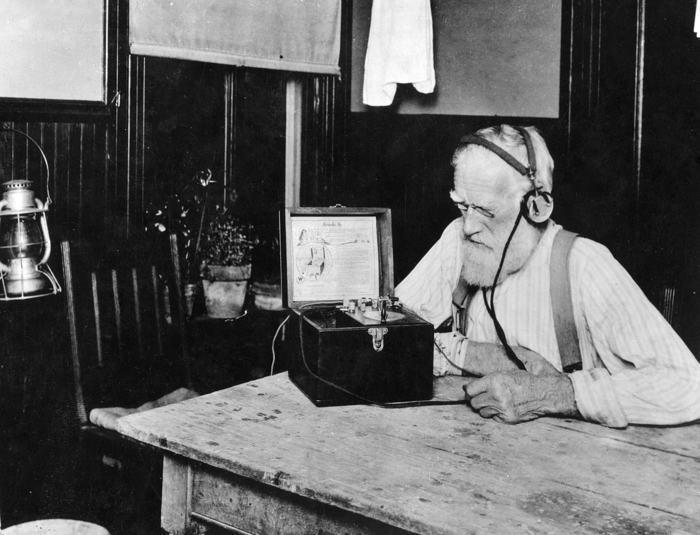

If you think of willshake as an exhibit that you can walk around, the player is like one of those little portable devices (usually a handset and a headset) that are sometimes used to provide self-guided tours of exhibition spaces, such as the one seen in Figure 2.

I like this analogy because it captures how the listening experience should feel.

First of all, it’s optional. It’s an enhancement to a space that is still worth exploring without it, and it doesn’t get in the way of that.

If you do choose to use it, you’re free to use it how you want. Most recordings will trace a certain path through the exhibit, but you’re not constrained to follow along. You’re free to wander around the entire site while listening to any recording.

And of course, you can only listen to one thing at a time, so only one player is needed. Many places will have one or more recordings available. It should always be clear what recordings are available, and it should be easy to listen to any of them, or switch between them. The player is easy to access, but easy to ignore when you’re not using it.

1.2 conducting through hyperspace

This music crept by me upon the waters,

Allaying both their fury and my passion

With its sweet air. Thence I have follow’d it,

Or it hath drawn me rather.

The Tempest

But this is not a physical exhibit—this is hyperspace. You can be “spirited” from one place to another, instantly or gradually, and willfully or passively.

The player, acting as a guide, can conduct you through the space. “Conduct” derives from the Latin conducere, which means “to bring or lead together.”1 The root ducere ultimately traces to a word meaning “to draw” or “drag.”2

So just as in The Tempest Ariel draws Ferdinand on through the island with his music, the player can lead you through willshake while you look and listen.

You can listen to one thing while exploring something else. And you can always jump straight to the place that you’re listening to.

2 listen locations

A listen location is just a way of pointing at an audio program in the site, using web addresses. Just as you can use web addresses (also known as URLs) to point to any specific place in willshake’s text, you can also link to any point in the recordings of those texts. (Whether or not visiting the address should trigger playback is another question that I’ll come to later.)

Figure 5 shows the breakdown of a listen location. Of course, all listen locations will start with https://willshake.net, which is not shown here.
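As a rough sketch of how such an address fits together, the following helper composes a listen location from a place, a program key and an optional anchor, following the `?listen=program#anchor` shape that the cue links in this document use. The name `listen_location` and the sample anchor are hypothetical, made up for illustration.

```javascript
// Hypothetical helper: compose a listen location relative to the site root.
// `path` is a place in the site, `program` is a recording key, and `anchor`
// (optional) is the id of a line in the text.
function listen_location(path, program, anchor) {
  return path + '?listen=' + program + (anchor ? '#' + anchor : '');
}

// The standard URL class can take the result apart again.
const url = new URL(
  listen_location('/plays/Ado/2.3', 'Living-Ado', 'some-line'),
  'https://willshake.net');

console.log(url.pathname);                   // the place
console.log(url.searchParams.get('listen')); // the program
console.log(url.hash);                       // the anchor, with its leading '#'
```

The anchor is left off when no starting point is wanted, in which case the address only names the place and the program.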

Both the hash and underscore are problematic in this diagram during PDF export; hence the escapes. See here.

Most of this document is about making listen locations work.

3 the audio module

The player requires script. If your browser doesn’t have script enabled—or doesn’t support script at all—then there is no audio player.

If your browser supports audio but you want to listen without using script, you can use the direct links to the recordings, which will open in another tab. In most browsers, this will cause playback to start right away. Then, you can return to the original tab and explore the site freely while listening.

Why not provide a built-in player anyway? Without script, listening to recordings inside of willshake would be unpleasant. Script makes it possible to navigate the site while listening, which is essential for programs that span over several sections (as most of them do). Script also makes it possible to coordinate playback with text, which is a main motivator of the player’s existence.

So, the player is implemented entirely by a script module. It is present throughout the site, since you can listen to audio anywhere.

<require module="audio" />

define(['getflow'], getflow => {

<<the audio module>>

});

Exactly what it does is defined in the remainder of this document. In order to deal with each topic in the most natural order, the following placeholders are defined to allow the necessary placement in the program. See literate programming.

console.log("audio: starting init");

<<constants>>

<<independent functions>>

<<state>>

<<bound functions>>

<<one-time setup>>

<<startup actions>>

<<audio exports>>

4 scopes and programs

Audio scopes are discussed in the recordings. Throughout the site, there are many scopes—i.e., places with relevant recordings.

Now, suppose that you start listening in one place (scope), and then you wander off. You’re still listening to the recording you started, but you end up in another scope, where different recordings (programs) are relevant.

The player, of course, is concerned with the program that you’re listening to.

There may be many scopes, but only two will be of interest at any given time: the one you’re visiting, and the one you’re listening to.

But what about the visiting scope? Who’s concerned about it? Well, the visiting scope is used to provide affordances for starting playback.

If there is audio for the current scope, then its contents will be listed in the document, as implemented in the recordings.

At the moment, that listing is what I’m using to find the recordings for the current scope.

function recordings_for_this_scope() {

return array_from(document.querySelectorAll(

'#audio-scope [data-audio]'))

.map(ele => ele.getAttribute('data-audio'));

}

This is really an expedient, since it depends on markup. And it doesn’t allow you to look up the available programs for arbitrary scopes. Alternatively, I could package (the necessary parts of) the whole audio catalog and read it directly. The downside would be retrieving more than you really need for a single scope.

4.1 audio ships with the site

And of course, before the web site can hope to play any audio, it has to have access to the files.

: $(ROOT)/Tupfile.ini |> ln --symbolic --relative $(AUDIO) %o |> $(SITE)/static/audio

Since the audio files are big, I just “link” to their master location so that unnecessary copies aren’t made during deployment. (The link_from macro doesn’t work for directory links because you can’t specify a directory as the input to a Tup rule.)

4.2 recording metadata

The recording catalog needs to be available to the site, so that it can know what’s in scope (except that it’s already doing that through markup), what the base path is for each recording, and what each recording’s title is. It needs all of that for out-of-scope recordings, too.

const await_catalog = get_json('/static/doc/audio.json');

This is kind of ad-hoc. Only some of it is used at the moment. The objective is to move the scope-specific stuff out of the script and into the data.

<xsl:output method="text" />

<xsl:variable name="q">"</xsl:variable>

<xsl:variable name="index"

select="document('../../database/works-index.xml')" />

<xsl:template match="audio-index">

<xsl:text>{</xsl:text>

<xsl:for-each select="audio">

<xsl:if test="position() > 1">,</xsl:if>

<xsl:value-of select="concat($q, @key, $q, ':')" />

<xsl:text>{</xsl:text>

<xsl:text>"of":"</xsl:text>

<xsl:value-of select="$index//play[@key = current()/of/@play]/@unique_title" />

<xsl:text>","tag":"</xsl:text><xsl:value-of select="tag" />

<xsl:text>","path":"/plays/</xsl:text><xsl:value-of select="of/@play" />

<xsl:text>"}</xsl:text>

</xsl:for-each>

<xsl:text>}</xsl:text>

</xsl:template>

: $(PROGRAM)/audio/to_json.xsl | $(ROOT)/data/audio/<all> \

|> ^o audio catalog to JSON %B ^ \

xsltproc %f %<all> > %o \

|> $(SITE_DOCS)/audio.json
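With a catalog in this shape, looking up the programs for an arbitrary place becomes a matter of filtering. The following is only a sketch: `programs_for_place` is a hypothetical name, the sample values are invented, and the rule that a program is in scope for its `path` and everything beneath it is an assumption, not something the site is known to do.

```javascript
// Sample data in the shape emitted by the transform above (key -> of, tag,
// path). The values are made up for illustration.
const sample_catalog = {
  'Living-Ado': {of: 'Much Ado About Nothing', tag: 'sample tag', path: '/plays/Ado'},
  'Living-MND': {of: "A Midsummer Night's Dream", tag: 'sample tag', path: '/plays/MND'}
};

// Hypothetical lookup: which programs are relevant to a given place?
// Assumes a program is in scope for its own `path` and anything beneath it.
function programs_for_place(catalog, place) {
  return Object.keys(catalog).filter(key => {
    const {path} = catalog[key];
    return place === path || place.startsWith(path + '/');
  });
}

console.log(programs_for_place(sample_catalog, '/plays/Ado/2.3'));
```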

4.3 formats

Not all browsers can play all formats. The player can’t proceed very far without confirming that there’s a playable format available.

function get_playable_format_extension(program) {

const audio = get_media_player();

function check(container, codec) {

return audio.canPlayType(`audio/${container}; codecs=${codec}`)

.replace('no', '');

}

if (audio && audio.canPlayType) {

// HARDCODED: right now all audio is available in exactly these two

// formats.

if (check('ogg', 'vorbis')) return 'ogg';

if (check('mp4', 'mp4a.40.2')) return 'm4a'; // AAC format

}

return '';

}

The section formats in the audio document covers the production and deployment of alternate audio formats.
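The decision made by `get_playable_format_extension` can be tried outside a browser by passing in the capability check. This is a sketch under that assumption: `pick_extension` and the three stand-in browsers are hypothetical, and only the two formats named above are considered.

```javascript
// Hypothetical variant of the check above that takes a `canPlayType`-like
// function as an argument, so the decision can be exercised without a browser.
function pick_extension(can_play_type) {
  const playable = (container, codec) =>
    can_play_type(`audio/${container}; codecs=${codec}`).replace('no', '');
  if (playable('ogg', 'vorbis')) return 'ogg';
  if (playable('mp4', 'mp4a.40.2')) return 'm4a'; // AAC
  return '';
}

// Stand-ins for browsers with different capabilities.
const plays_everything = () => 'probably';
const plays_only_aac = type => (/^audio\/mp4/.test(type) ? 'maybe' : '');
const plays_nothing = () => 'no'; // an answer given by some older browsers

console.log(pick_extension(plays_everything));
console.log(pick_extension(plays_only_aac));
console.log(pick_extension(plays_nothing));
```

Ogg is tried first, so a browser that can play both formats gets the Ogg file.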

5 the media player

The player is really just a container.

(() => {

const the_player = document.createElement('div');

the_player.innerHTML = `

<<the player markup>>

`;

document.body.appendChild(the_player).id = 'the-player';

}());

The actual playback is handled by an audio element. So at the very least, an audio element will be needed.

<audio id="the-player-audio" class="player-audio"></audio>

function get_media_player() {

return document.getElementById('the-player-audio');

}

An audio player looks like you’d expect—a little box with buttons and knobs. These will look different from one browser to another, and will generally be too complicated. So I’m just going to hide it.

#the-player-audio

display none

Instead, the audio element will be used “in the background,” meaning that this module will be responsible for telling it what to do and when.

If stylesheets are disabled, then the audio player would be visible. For now I’m not taking that into consideration, but in principle everything else should still work. The listen links would not be visible, since they use pseudo-elements.

Surely you’ve experienced “buffering.” Well, that’s what it takes to stream large media files over unpredictable networks. This fairly generic function turns an HTML5 media element into a promise, which resolves when the element can play back.

function when_ready_to_play(media) {

if (media.readyState >= HAVE_FUTURE_DATA)

return resolve_now();

return next_time(media, 'canplay');

}
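To see the promise behave without a real media element, here is a sketch that writes the same idea out in one piece and drives it with a stand-in object. Both `fake_media` and `ready_to_play` are hypothetical names; the stand-in ignores event types and supports just enough to register, remove and trigger listeners.

```javascript
const HAVE_FUTURE_DATA = 3;

// A hypothetical stand-in for a media element. It ignores the event type,
// which is enough here because only 'canplay' is ever used.
function fake_media(ready_state) {
  const listeners = new Set();
  return {
    readyState: ready_state,
    listeners,
    addEventListener: (type, fn) => listeners.add(fn),
    removeEventListener: (type, fn) => listeners.delete(fn),
    trigger: () => Array.from(listeners).forEach(fn => fn())
  };
}

// Same idea as `when_ready_to_play`, with the one-off handler inlined.
function ready_to_play(media) {
  if (media.readyState >= HAVE_FUTURE_DATA) return Promise.resolve();
  return new Promise(resolve => {
    function once() {
      media.removeEventListener('canplay', once);
      resolve();
    }
    media.addEventListener('canplay', once);
  });
}

const buffering = fake_media(0);
ready_to_play(buffering).then(() => console.log('buffered enough to play'));
buffering.trigger(); // simulates the 'canplay' event
```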

6 cues

Does this go at some higher level, like with audio or the recordings?

Like vinyl records, digital media affords random access: you can start listening at any point you choose. Records also let you “see” a meaningful division of the program, so you can start where you want to. In the plays, “scenes” are the large divisions; “chapters” are the equivalent in books. We can go even further: it should be possible to start playback at any point represented in a recording, down to the specific line.

To that end, we define the following terms:

- cue

- a binding between a point in the text and a point in an audio program

- cued line

- a line of the currently open section with an associated cue

- current line

- the cued line that is associated with the audio program’s current playback position
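As an illustration of the last definition, the cue for the current line can be found by taking the latest cue that starts at or before the playback position. This is a minimal sketch, assuming the cues are objects with an `at` time in seconds and are already in chronological order; `cue_at` and the sample ids are hypothetical.

```javascript
// Hypothetical helper: find the cue in effect at a playback time (seconds).
// Assumes `cues` is sorted by `at`. Returns null before the first cue.
function cue_at(cues, time) {
  let found = null;
  for (const cue of cues) {
    if (cue.at > time) break;
    found = cue;
  }
  return found;
}

const sample_cues = [
  {at: 0, a: 'line-1'},
  {at: 4.5, a: 'line-2'},
  {at: 9.25, a: 'line-3'}
];

console.log(cue_at(sample_cues, 5));
```

A linear scan is enough for a sketch; with long programs a binary search over the same sorted array would do the same job faster.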

Sometimes, the intent to listen will include a specific cue. This will usually be the case with listen links, for example. In those cases, obviously, playback will begin at the indicated cue.

Where do the cues come from? See audio development.

An audio track can enter the same section more than once at a different point (for instance, the song in Ado 2.3 is revisited at the end of 3.1). In other words, while the cue timings proceed sequentially through the audio, the same is not necessarily true of the corresponding lines.

Nonetheless, most successive cues will belong to the same section, so line cues don’t refer to the section. The structure assumes that a recording will never revisit the same line (although it does happen very briefly at the end of Ado 3.3).

Cueing of stage directions should generally work with the existing infrastructure, but it is not currently supported, mainly because the uniqueness of their ids is not yet guaranteed.

6.1 a note on WebVTT

WebVTT (Web Video Text Tracks) is the standard means of associating timestamped metadata with streaming media.3 The following sample of WebVTT format (for the Living Shakespeare production of All’s Well That Ends Well) shows that it’s straightforward to author.

WEBVTT - Living-AWW Testing

00:00:00.000 --> 00:00:17.000
(Introductory music)

Helenas_father
00:00:17.000 --> 00:00:25.000
Helena's father, Gerard de Narbon, a famous physican has recently died, leaving her in the care of the Countess of Rousillon.

The_countess
00:00:26.000 --> 00:00:31.000
The countess' only son, Bertram... is leaving to serve the king of France, who is very ill.

Bertram_accompanied
00:00:36.000 --> 00:00:41.000
Bertram, accompanied by an old lord, Lafeu, takes leave of his mother.

Structured metadata is also supported in place of captions, so although it’s apparently aimed at video subtitling4, WebVTT is suitable—in principle—for any cueing application.

So why doesn’t willshake use WebVTT instead of a custom cue format? It did, initially. But ultimately, it’s easier to do it yourself. Like most web features, WebVTT isn’t available in all browsers, so it would have to be “shimmed” anyway for browsers that don’t support it. And it’s misleading to say that WebVTT is “supported” in all major browsers5, because of inconsistencies in the API. As this document shows, audio tracking can be done with very little code; for our purposes, that is easier than a WebVTT shim.

6.2 cue data normal form

The cues are assumed to be stored in normal form. This means:

- cues are in chronological order

- an anchor can only be associated with one time

- a cue points to either an anchor or a section

The following transform applies those rules to a set of cues. It also eliminates a @section that merely repeats the immediately preceding one.

<xsl:output indent="yes" />

<xsl:template match="/audio-cues">

<xsl:copy>

<xsl:copy-of select="@*" />

<xsl:for-each select="cue">

<xsl:sort select="@at" data-type="number" />

<!-- Skip superseded cues -->

<xsl:if test="not(following-sibling::cue[@a = current()/@a])">

<xsl:copy>

<xsl:copy-of

select="@*

[not(name() = 'section' and . = (../preceding-sibling::cue[@section])[last()]/@section)]

| node()" />

</xsl:copy>

</xsl:if>

</xsl:for-each>

</xsl:copy>

</xsl:template>

I think the following shorter and more idiomatic version is equivalent, but I haven’t tested it yet.

<xsl:import href="identity.xsl" />

<xsl:output indent="yes" />

<xsl:template match="audio-cues">

<xsl:copy>

<xsl:copy-of select="@*" />

<xsl:apply-templates select="cue">

<xsl:sort select="@at" data-type="number" />

</xsl:apply-templates>

</xsl:copy>

</xsl:template>

<!-- Skip superseded cues. XSLT 1.0 does not allow current() in a match pattern, so the anchors are compared directly. -->

<xsl:template match="cue[@a = following-sibling::cue/@a]" />

<!-- Skip successive sections -->

<xsl:template match="@section[. = (../preceding-sibling::cue[@section])[last()]/@section]" />

The transform assumes that the input is in “normal form” (as defined above).
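For readers more at home in JavaScript than XSLT, the normal-form rules can be restated on plain objects. This is a loose restatement and not a port of the transforms above: `normalize_cues` is a hypothetical name, cues are assumed to look like `{at, a}` or `{at, section}`, and a cue that only repeats the current section is dropped outright.

```javascript
// Hypothetical restatement of the normal-form rules on plain objects.
// A cue is {at, a} for an anchor or {at, section} for a section.
function normalize_cues(cues) {
  // An anchor can have only one time: the last entry in the input wins.
  const last_index = {};
  cues.forEach((cue, i) => { if (cue.a) last_index[cue.a] = i; });

  let current_section;
  return cues
    .filter((cue, i) => !cue.a || last_index[cue.a] === i)
    .sort((x, y) => x.at - y.at) // chronological order (filter made a copy)
    .filter(cue => {
      if (!cue.section) return true;
      if (cue.section === current_section) return false; // repeated section
      current_section = cue.section;
      return true;
    });
}

const normalized = normalize_cues([
  {at: 10, a: 'x'},          // superseded by the later entry for 'x'
  {at: 0, section: '1.1'},
  {at: 2, a: 'x'},
  {at: 5, section: '1.1'},   // repeats the current section
  {at: 7, section: '1.2'}
]);

console.log(normalized);
```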

6.3 preprocessing cues

Of course, the audio module needs access to the cues. For that, the data needs to be copied to the web site. To make them easier to process in JavaScript, they are first converted into a (terse) JSON format.

: foreach $(ROOT)/database/cues/*.xml \

| $(ROOT)/program/transforms/cues_to_json.xsl \

|> ^o cues to JSON %B^ \

xsltproc $(ROOT)/program/transforms/cues_to_json.xsl %f > %o \

|> $(ROOT)/site/static/cues/%B.json

This transform emits text tracking metadata in JSON format for use with the audio module.

It takes as input the audio cues file for a given audio program.

<xsl:output method="text" />

<xsl:variable name="quot">"</xsl:variable>

<xsl:template match="/audio-cues">

<xsl:text>{"cues":[</xsl:text>

<xsl:for-each select="cue[@at]">

<xsl:if test="position() != 1">,</xsl:if>

<xsl:text>[</xsl:text>

<!-- Cue will be either an anchor or a section. -->

<xsl:value-of

select="concat(

format-number(@at, '0.##'),

',', $quot, @a, @section, $quot)"/>

<!-- Indicates to client that this is a section key. -->

<xsl:if test="@section">,1</xsl:if>

<xsl:text>]</xsl:text>

</xsl:for-each>

<xsl:text>]}</xsl:text>

</xsl:template>

The format is intentionally terse, since the data will have to be transmitted.

6.4 processing cues

With those JSON files available, it’s straightforward to load them. The data structure is optimized for transfer, but not for usage. So instead of providing the raw cue data, the cues are first indexed.

Isn’t memoize inefficient for an async function, since it has to save the whole promise, and the XHR as well?

const get_cues_for =

memoize(program =>

get_json('/static/cues/' + program + '.json')

.then(({cues}) => cues_to_index(cues)));

In their raw form, the cues are an array of arrays in the form [time, id, section?], where section tells whether id is an anchor (line key) or section key.

But most of the time, you’ll be looking for a specific cue. So, whenever you ask for the cues, you also get an index of the cues by anchor, along with the cues partitioned by section. It also includes a flat array of the cues converted to objects.

function cues_to_index(cues) {

console.log('INDEXING CUES!');

const anchors = {}, sections = [], all = [];

let i, tuple, id, cue, section;

for (i = 0; i < cues.length; i++) {

all.push(cue = {at: (tuple = cues[i])[0]});

id = tuple[1];

if (tuple[2]) // Partition by section

sections.push(section = {section: (cue.section = id), cues: []});

else // Index by anchor

anchors[cue.a = id] = cue;

if (section)

section.cues.push(cue);

}

return {

all,

cues: anchors,

sections

};

}

Yes, I was in uglify-challenge mode when I wrote this, and I can’t seem to get out of it.
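For comparison, here is a more spelled-out sketch of the same indexing, followed by a small example of what comes out. `index_cues` is a hypothetical name and the sample ids are invented; the result is meant to have the same shape as what `cues_to_index` returns.

```javascript
// A spelled-out sketch of the indexing above. Input tuples are
// [time, id] for an anchor or [time, id, 1] for a section.
function index_cues(tuples) {
  const anchors = {}, sections = [], all = [];
  let section = null;
  for (const [at, id, is_section] of tuples) {
    const cue = {at};
    all.push(cue);
    if (is_section) {
      // Partition by section: start a new group.
      cue.section = id;
      section = {section: id, cues: []};
      sections.push(section);
    } else {
      // Index by anchor.
      cue.a = id;
      anchors[id] = cue;
    }
    // Cues before the first section marker belong to no section.
    if (section) section.cues.push(cue);
  }
  return {all, cues: anchors, sections};
}

const index = index_cues([
  [0, '1.1', 1], [3.5, 'line-1'], [8, 'line-2'], [60, '1.2', 1]
]);

console.log(index.cues['line-2'].at);
console.log(index.sections.map(s => s.section));
```

Note that each section's own cue is the first entry in its `cues` list, so the start time of a section can be read from there.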

7 player state and coördination

The player is a coördinated system. The parts of the system need to stay on the same page continuously as things happen. For example, when the user touches something on the console, the part of the system that first hears about it must provide a signal so that the other interested parts of the system can respond accordingly. This happens through something called “message passing.” Figure 10 shows a basic publisher/subscriber (or “pub-sub”) view of the system.

You could say that this is the machine interface to the player, which allows programmatic control and communication with the player.

But why go through a single dispatcher? Isn’t a -> b, c -> d, e -> f a simpler model? In other words, why have one dispatcher for more than one event?

The first event that we’re interested in is the intent to listen, which is covered in the next section. Is this part about a specific set of events, or about a general mechanism, where the events will be declared later? And if the latter, then what mechanism is needed, besides the PubSub that we already have?
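To make the pub-sub idea concrete, here is a minimal stand-in for a single-event dispatcher. It is an assumption about how such a thing might work, not the PubSub that the site actually uses: only the method names `on` and `send` are taken from how they are called in this document.

```javascript
// Hypothetical stand-in for a one-event publisher/subscriber. Subscribers
// register with `on`; `send` passes its arguments to each of them in turn.
function PubSub() {
  const subscribers = [];
  return {
    on(handler) { subscribers.push(handler); },
    send(...args) { subscribers.forEach(handler => handler(...args)); }
  };
}

const intent = PubSub();
const heard = [];

// Two independent parts of the system respond to the same signal.
intent.on(program => heard.push('console saw ' + program));
intent.on(program => heard.push('player saw ' + program));

intent.send('Living-Ado');
console.log(heard);
```

The sender doesn't need to know who is listening, which is what lets separate parts of the player respond to the same signal.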

The player also has to remember certain things about itself, such as which program is currently being listened to.

const the_player_state = {};

8 intent to listen

The player doesn’t play anything until it receives an intent to listen.

const intent_to_listen = PubSub();

The intent can include a specific location where playback should start. If no program or location is specified, just resume where you left off.

intent_to_listen.on((program, spot) => {

const audio = get_media_player();

console.log('audio: intent to listen to ', program, 'at ', spot, 'using', audio);

if (program)

the_player_state.program = program

else

program = the_player_state.program;

// If no program has been chosen at any point, do nothing.

if (!program) return;

// Check whether this type is playable. The "real" version of this would be

// asynchronous, since it would have to look up the program in the catalog.

const format_extension = get_playable_format_extension(program);

if (!format_extension) {

alert("Sorry, this audio recording is not available in a format playable by your browser.");

return;

}

// Prepare to find the exact location and start playback.

when_ready_to_play(audio)

.then(() => get_cues_for(program))

.then(({cues}) => {

if (spot && spot.hash) {

const time = (cues[spot.hash.replace(/^#/, '')] || {}).at;

if (time)

// This was sometimes throwing an error in Firefox about an

// object being no longer "usable", but I haven't figured

// out why.

audio.currentTime = time;

}

audio.play();

});

// Now request the actual audio. This needs to be done after the above

// preparations, because it may trigger the "readiness" right away.

const audio_file = '/static/audio/' + program + '.' + format_extension;

// Don't re-load the same program---that would interrupt playback. The

// value of `src` will be resolved to an absolute URL after it's set, so you

// have to test against the attribute.

if (audio_file != audio.getAttribute('src')) {

audio.src = audio_file;

audio.load();

// HACK to force Firefox to start download. I don't know that `.load()`

// does anything at all, in any browser.

//

// I've removed this and can't reproduce any problem in Firefox or

// Chrome.

//audio.currentTime += .01;

}

});

Intent to listen can be signalled in two ways:

- by using a “play” control

- by navigating to a listen location

8.1 from the URL

Whenever the query says listen=program, that’s interpreted as an intent to listen to program.

function location_changed({new_place}) {

<<location changed>>

// Use the match result directly rather than the legacy static `RegExp.$1`.

const listen = new_place && /[?&]listen=([^&]+)/.exec(new_place.search);

if (listen)

intent_to_listen.send(listen[1], new_place);

}

navigating.on(location_changed);

This applies whenever the location changes—meaning that you’ve already entered the site and you follow a link that includes a listen intent.

But it also applies when you first enter such an address.

location_changed({ new_place: window.location });
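To show what that pattern picks out of a query string, here is a small sketch that can be run on its own. `program_from_search` is a hypothetical helper, not part of the module.

```javascript
// Hypothetical helper: extract the program key from a query string using the
// same pattern as above. Returns null when there is no listen intent.
function program_from_search(search) {
  const match = /[?&]listen=([^&]+)/.exec(search || '');
  return match ? match[1] : null;
}

console.log(program_from_search('?listen=Living-MND'));
console.log(program_from_search('?view=text&listen=Living-Ado'));
console.log(program_from_search('?unlisten=nothing'));
```

Because the pattern requires `?` or `&` immediately before `listen=`, a parameter whose name merely ends in "listen" is not mistaken for an intent.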

This is somewhat controversial. Is it acceptable to interpret certain URLs as signalling an intent to listen? In other words, should the audio player be able to start without the user explicitly asking?

If you believe that a new visitor should not hear any audio until choosing a clearly marked option, then autoplay is user-hostile. Apple takes this position—at least on devices where the user may be charged for using data.

In Safari on iOS (for all devices, including iPad), where the user may be on a cellular network and be charged per data unit, preload and autoplay are disabled. No data is loaded until the user initiates it.6

So autoplay is not available on iOS Safari, not as a matter of neglect, but as a matter of policy. And while that exception is understandable for that case, the same question applies generally.

Yet it can be convenient to link directly to a page that starts playback immediately. In such cases, the person would have to be notified out-of-band (i.e. by the person providing the link) that opening it will make sound. For example, the Hacker News submission guidelines stipulate that

If you submit a link to a video or pdf, please warn us by appending [video] or [pdf] to the title.7

I myself have been annoyed by this when children were sleeping.

But of course, how people present links is completely out of willshake’s control. For now, I am allowing auto-play (except on Safari iOS, I suppose), but it remains an open question.

8.1.1 TODO start at the beginning of a section when an anchor is not specified

Right now, listen links will only work as expected if you specify the exact section and anchor where playback should start. (The exception to this is if you specify the first section with no cue, which causes playback to start from the beginning of the program.)

If a section is specified without an anchor, playback should start from the first cue for that section (or the beginning, if it’s the first section).
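One way the intended behavior could look, given the index that `cues_to_index` produces, is sketched here. This is not implemented in the module: `start_time_for` is a hypothetical name, and how the section in the address maps to a section key in the cue data is assumed to be a simple equality.

```javascript
// Hypothetical helper for the behavior described above. `index` has the
// shape returned by `cues_to_index`: `cues` maps anchors to cues, and
// `sections` lists each section with its cues in order.
function start_time_for(index, section, anchor) {
  // An explicit anchor wins, if it has a cue.
  if (anchor && index.cues[anchor]) return index.cues[anchor].at;
  // Otherwise use the first time the program enters the section.
  const position = index.sections.findIndex(s => s.section === section);
  if (position <= 0) return 0; // first section, or unknown: from the top
  return index.sections[position].cues[0].at;
}

const sample_index = {
  cues: {'line-9': {at: 75, a: 'line-9'}},
  sections: [
    {section: '1.1', cues: [{at: 0, section: '1.1'}]},
    {section: '1.2', cues: [{at: 60, section: '1.2'}, {at: 75, a: 'line-9'}]}
  ]
};

console.log(start_time_for(sample_index, '1.2'));
```

Since a recording can enter the same section more than once, this sketch settles on the first entry; whether that is the right choice is left open.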

9 listen links

A listen link is a hyperlink pointing to a listen location. Such links are the primary means of signaling the intent to listen, since I trust that no one will actually type in a URL. Inside of an audio scope, you’ll see these links beside the places where you can start playback, which activates the player. So listen links are really the keys to the audio player.

Whenever you change location, listen links are shown for the first program.

const [first_program] = recordings_for_this_scope();

if (first_program)

ensure_cue_links(first_program);

This works, but it’s inefficient, considering that we have an index of the cues by section. Using that index would require somewhat specific knowledge of how the route maps to it. I’ll deal with that in a later iteration.

// Get and display the cue links for the current section.

function ensure_cue_links(program) {

console.log('audio: ensuring cues for program', program)

get_cues_for(program)

.then(({all}) => {

all.forEach(({a}) => {

const ele = document.getElementById(a);

if (ele) {

const id = a + '__cue';

if (!document.getElementById(id)) {

const cue_link = document.createElement('a');

cue_link.id = id;

cue_link.className = 'cue';

cue_link.href = window.location.pathname + '?listen=' + program + '#' + a;

ele.parentNode.insertBefore(cue_link, ele.nextSibling);

}

}

})

});

}

At some point, you’ll want to get a cue link knowing only its anchor.

function cue_link_for(anchor) {

return document.getElementById(anchor + '__cue');

}

Now things get fun. What do the cue links look like? Well, they’re a lot like the numbered line links, but on the other side.

a.cue

@require signs

position absolute

left 100%

width 2.5rem

height 2rem

sign-text-color()

text-align center

&:before

// In Chrome, this is always black, regardless of font color. Yes, it's

// called "BLACK RIGHT-POINTING TRIANGLE", but come on.

content '▶'

& ~ &:not(:hover):not(:active):not(.pointing):before

content '·'

@require pointing

@require colors

+user_pointing_at(also: '&.current')

background $contextColor

This doesn’t really belong here, but I’m prototyping it. This is basically a modified copy of the anchor lane. I’m not settled on a color for it.

@import colors

@import paper

@import docking

$resourcesLaneColor = lighten(desaturate($resourcesColor, 75%), 25%)

$resourcesLaneColor = blend($paperColor, $resourcesColor, .5)

$resourcesLaneColor = desaturate(blend($paperColor, $resourcesColor, .33), 50%)

$resourcesLaneWidth = 2.5rem

.scene:after

content ''

dock-left(left: 100%)

width $resourcesLaneWidth

background-color $resourcesLaneColor

z-index -5 // get below scene's box shadow

box-shadow .25em 0 .5em -.25em #333

box-sizing border-box // or the shadow doesn't pick up pointer events

border-radius 0 4px 4px 0

10 the console

So far, the player has been invisible. You can hear it, but you can’t see it. You can invoke it by using listen links, but you can’t control it (or even know about it) in any other way. This is useful up to a point, and certainly free of clutter. But it’s a bit like a spirit singing into your ear. At the very least, how do you tell it to stop?

The console is the visible part of the player—a place where you can directly see and manipulate the player’s state.

The console may or may not end up being needed on any given visit. But there’s no harm in creating it right away.

<div id="the-player-console" class="player-console">

<div class="player-state-control"></div>

<div class="player-feedback"></div>

</div>

This is added when the audio module is loaded, and these elements will be re-used throughout the lifetime of the page.

The player console must always be available, and in a consistent location—yet not intrusive. I strenuously avoid the use of topmost fixtures, but for this case, I make an exception. It sits in the bottom-right corner of the screen, this being the most out-of-the-way place.

#the-player-console

position fixed

z-index 999 // on top of everything else

bottom .5rem

right @bottom

The console is not visible until the player has been used, that is, until it has entered some state, such as playing or paused.

.player-console:not([data-player-state])

display none

But you do need a way to dismiss it, which would amount to stopping playback and hiding it.

Space is at a premium. You can’t “move” the player console out of the way. So its contents should be very minimal.

The main thing on the console is a button for changing the state of the player.

@import colors

@import signs

@import thumb-metrics

.player-state-control

cursor pointer

circle($thumbRems)

color $signTextColor

box-shadow 0 0 .5em -.2em #111

<<player control style rules>>

// Just like the console containing it, the button is aligned to the bottom

// right.

position absolute

bottom 0

right 0

background rgba($resourcesColor, .8)

@require pointing

+user_pointing_at()

background $resourcesLightColor

Since “play” and “pause” apply in mutually exclusive states, there’s only one button, which “flips” between these commands when the playback state changes.

@import arrows

@import centering

font-size 150%

transition .2s

&:before, &:after

content ''

position absolute

transform rotateY(90deg)

// Show a "play" icon when paused

[data-player-state="paused"] &

transform rotateY(360deg)

+arrow-before-pointing(right)

full-center()

margin-left .5em

// Show a "pause" icon when playing

[data-player-state="playing"] &

transform rotateY(180deg)

&:before, &:after

full-center()

background $signTextColor

width .5em

height 1.5em

&:before

margin-left -.4em

&:after

margin-left .4em

There is a third state, which you may be familiar with: it’s called “waiting.” The player is in this state when it’s supposed to be playing, but hasn’t loaded enough of the media file yet.

[data-player-state="waiting"] &

// `both` fill mode doesn't really work, I think because the other states

// use transitions instead of animations.

animation 2s ease-in-out infinite both player-waiting

&:before

full-center()

line-height .8

text-align center

content 'loading\000a...'

font-size 80%

@keyframes player-waiting

0%

transform rotateY(0)

100%

transform rotateY(360deg)

(() => {

// DON'T overload `console` here. This should be the player. Put the

// various markup bits into one container, including the media player.

const _console = document.querySelector('#the-player-console');

const control = document.querySelector('.player-state-control');

const STATE = 'data-player-state';

const audio = get_media_player();

on(audio, 'pause', () => _console.setAttribute(STATE, 'paused'));

on(audio, 'playing', () => _console.setAttribute(STATE, 'playing'));

// As elsewhere, using `duration` as a proxy for "is loaded".

intent_to_listen.on(() => _console.setAttribute(STATE, audio.duration ? 'playing' : 'waiting'));

on(control, 'click', () => {

// Assume the player is "paused" if it's not in any state yet.

const state = _console.getAttribute(STATE) || 'paused';

if (state == 'playing') {

if (audio) audio.pause();

} else

intent_to_listen.fire();

});

}());

There’s some asymmetry between “play” and “pause.” That’s because you can always pause instantly, whereas there may be a delay between the intent to listen and the actual start of playback.
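The states and their transitions can be summarized as a pair of pure functions. This is only a restatement of the handlers above for the sake of clarity; `next_player_state` and `click_action` are hypothetical names, and `loaded` stands for the `audio.duration` check.

```javascript
// Hypothetical summary of the console's states as a pure function.
// `event` is 'intent', 'playing' or 'pause'; `loaded` stands for the
// `audio.duration` check used above.
function next_player_state(state, event, loaded) {
  switch (event) {
    case 'intent':  return loaded ? 'playing' : 'waiting';
    case 'playing': return 'playing';
    case 'pause':   return 'paused';
    default:        return state;
  }
}

// What a click on the control asks for, given the current state.
function click_action(state) {
  return state === 'playing' ? 'pause' : 'intent';
}

console.log(next_player_state(undefined, 'intent', false));
console.log(click_action('playing'));
```

The asymmetry shows up here as well: 'pause' leads straight to a state, while 'intent' may pass through 'waiting' first.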

The player controls are a human interface. A human interface should always be built on top of a machine interface. In any case, the objective is to manipulate the state of the player.

11 incidentals

A few generic items are used here. They may move to a common module.

11.1 functions

11.1.1 event handlers

function on(element, events, handler) {

events.split(',').forEach(

event => element.addEventListener(event, handler));

}

function off(element, events, handler) {

events.split(',').forEach(

event => element.removeEventListener(event, handler));

}

11.1.2 memoize

Cache single-arity functions.

function memoize(f) {

const cache = {};

return key => {

if (!cache.hasOwnProperty(key))

cache[key] = f(key);

return cache[key];

}

}
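On the earlier question about memoizing an async function: what gets cached is the promise itself, so repeated requests for the same key share one underlying call. The sketch below repeats the function so that it can run on its own, and uses a made-up `fake_fetch` in place of a real request.

```javascript
function memoize(f) {
  const cache = {};
  return key => {
    if (!Object.prototype.hasOwnProperty.call(cache, key))
      cache[key] = f(key);
    return cache[key];
  };
}

// Stand-in for a network request; counts how often it is really made.
let requests = 0;
const fake_fetch = program => {
  requests++;
  return Promise.resolve({program});
};

const get_once = memoize(fake_fetch);
const first = get_once('Living-Ado');
const second = get_once('Living-Ado');

console.log(first === second, requests); // true 1
```

One consequence worth knowing is that a rejected promise is cached as well, so a failed request would not be retried for the lifetime of the page.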

11.1.3 get JSON

This is used to get cue data. It’s likely to be wanted in another module.

function get_json(path) {

return getflow.GET(path)

.then(request => JSON.parse(request.responseText));

}

11.1.4 array from

I stole this from getflow, but its version is called make_array.

function array_from(array_like_thing) {

return Array.isArray(array_like_thing)?

array_like_thing

: [].slice.call(array_like_thing);

}
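
A quick sketch of what this does with the usual suspects: a real array, an `arguments` object, and a plain object with a `length`. In the player itself the input would more likely be a DOM node list, which is an assumption here since the example avoids the DOM.

```javascript
// Equivalent copy of array_from, so the sketch runs on its own.
function array_from(array_like_thing) {
  return Array.isArray(array_like_thing)
    ? array_like_thing
    : [].slice.call(array_like_thing);
}

// Real arrays are passed through untouched (same reference).
const real = [1, 2, 3];
const same = array_from(real);

// `arguments` is array-like but has no `map` of its own.
function doubled() {
  return array_from(arguments).map(x => x * 2);
}
const result = doubled(1, 2, 3);

// Any object with numeric keys and a `length` will do.
const from_object = array_from({ 0: 'a', 1: 'b', length: 2 });
```

Here `same` is the very same array as `real`, `result` holds 2, 4 and 6, and `from_object` is a real array of 'a' and 'b'.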

11.1.5 resolve now

This is a generic thing I took from getflow. It’s just a shorthand for readability.

function resolve_now(value) {

return Promise.resolve(value);

}

11.1.6 constants

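These constants are mostly for readability. Their actual values don’t matter here, just their names. (HAVE_FUTURE_DATA does happen to match the value of the standard HTMLMediaElement.HAVE_FUTURE_DATA ready state.)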

const HAVE_FUTURE_DATA = 3;

//const EVENT_FROM_NATIVE = -1;

11.1.7 next time (one-off events)

This is a fairly generic one-off pattern. It lets you capture just one occurrence of an event, disposing of the handler once that’s done.

function next_time(element, event) {

return new Promise(resolve => {

function once() {

off(element, event, once);

resolve();

}

on(element, event, once);

});

}
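
To see the one-off behavior, here is a sketch using a hand-rolled fake element. `make_fake_element`, its `dispatch` and `count` methods, and the choice of the 'canplay' event are all assumptions for the example. The three functions from above are repeated so that it runs on its own.

```javascript
// Copies of the functions above, so the sketch is self-contained.
function on(element, events, handler) {
  events.split(',').forEach(
    event => element.addEventListener(event, handler));
}
function off(element, events, handler) {
  events.split(',').forEach(
    event => element.removeEventListener(event, handler));
}
function next_time(element, event) {
  return new Promise(resolve => {
    function once() {
      off(element, event, once);
      resolve();
    }
    on(element, event, once);
  });
}

// Hypothetical stand-in for a DOM element that lets us count listeners.
function make_fake_element() {
  const handlers = {};
  return {
    addEventListener(type, handler) {
      (handlers[type] = handlers[type] || []).push(handler);
    },
    removeEventListener(type, handler) {
      handlers[type] = (handlers[type] || []).filter(h => h !== handler);
    },
    dispatch(type) {
      (handlers[type] || []).slice().forEach(h => h());
    },
    count(type) {
      return (handlers[type] || []).length;
    }
  };
}

const element = make_fake_element();
let fired = 0;
next_time(element, 'canplay').then(() => { fired += 1; });

const listeners_before = element.count('canplay'); // handler is attached
element.dispatch('canplay'); // resolves the promise, removes the handler
element.dispatch('canplay'); // nobody is listening any more
const listeners_after = element.count('canplay');
```

The handler is attached as soon as `next_time` is called and is gone after the first dispatch, so `listeners_before` is 1 and `listeners_after` is 0. The `then` callback runs once, shortly afterwards, since promise callbacks are always deferred.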

12 discoverability

After all of this work, wouldn’t it be a shame if nobody used the audio feature because nobody knew that it existed?

Well, I reserved a bit of “breathing room” in the entryway, and if no other feature is hijacking it right now, I’ll use it for this.

#the-works-link

<after>

<div class="works-featured region-message">

<p>You can <a href="/about/the_audio_player">listen and follow along

with</a> the 1962 “Living Shakespeare” recordings of</p>

<ul class="featured-list">

<li><a href="/plays/MND/1.1?listen=Living-MND" class="featured-link"><i>A

Midsummer Night’s Dream</i></a></li>

<li><a href="/plays/Ado/1.1?listen=Living-Ado"

class="featured-link"><i>Much Ado About Nothing</i></a></li>

<li><a href="/plays/MV/1.1?listen=Living-MV" class="featured-link"><i>The

Merchant of Venice</i></a></li>

</ul>

<p>Coming soon for other plays.</p>

</div>

</after>

My wife didn’t like the “buttons” as part of the inline text, so I made them a list. I think it’s better.

@import colors

@import arrows

@import pointing

@import plain_list

// Not relevant here, but this looks bad in many cases.

#Shakespeare-region-message

hyphens none

.works-featured

.featured-list

plain-list()

.featured-link

display inline-block

margin-bottom .2em

color rgba(white, .8)

padding .25em

border-radius .5em

box-shadow 1px 1px 2px #111

background rgba($worksColor, .6)

background rgba($resourcesColor, .6)

display inline-flex

align-items center

+arrow-after-pointing(right, size: .5em)

display inline-block

margin-left .5em

+user_pointing_at()

background $contextColor

13 roadmap

Life’s but a walking shadow, a poor player

That struts and frets his hour upon the stage

And then is heard no more.

Macbeth

13.1 visibility of the player

Currently, the player never appears preëmptively. It only appears as the result of a user intent to listen. And “listen” buttons are only present in places where there is audio.

For example, because most recordings of the plays are based on highly trimmed-down texts, there will be many places where no “listen” buttons are seen. Of course, that correctly reflects the availability of the audio.

But in such cases, it may still be useful to have the player appear in some small form as an indication of audio being available for the more general area that you’re visiting.

Notwithstanding that, I’m still leery about this because the player is a topmost fixture. Topmost fixtures are annoying unless used very unobtrusively.

Keep in mind that, in cases such as the above example, playback would have to take you somewhere else, anyway.

13.2 remote listen links

There are two kinds of listen links:

- at the place where you can listen to something

- by the sign pointing to such a place

The audio module can add the first kind generically, by scanning the current section for anchors matching cues.

The second kind is more ad hoc. The proposal is to add a second “listen” link alongside links that target locations with audio.

It would make sense to do this only when audio is known to be available for the target location. For example, a link to a play would be eligible for a listen link when any audio is known to be available for the play. Same for specific scenes, and specific lines. The latter two cases require the cues data; the former, the audio index.
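
The eligibility rule could look something like the following. This is purely a sketch of the proposal: the function `has_audio_for` and the shapes of `target`, `audio_index` and `cues` are invented for illustration and do not describe the module’s actual data.

```javascript
// Hypothetical shapes, for illustration only:
//   target:      { play: 'MND', scene: '1.1', line: 12 } (scene, line optional)
//   audio_index: { MND: ['Living-MND'], ... }  (play key -> programs)
//   cues:        [{ play: 'MND', scene: '1.1', line: 12 }, ...]
function has_audio_for(target, audio_index, cues) {
  const programs = Object.prototype.hasOwnProperty.call(audio_index, target.play)
    ? audio_index[target.play]
    : [];
  if (programs.length === 0) return false;

  // A link to a whole play only needs the audio index.
  if (target.scene === undefined) return true;

  // Scene and line links need a matching cue.
  return cues.some(cue =>
    cue.play === target.play &&
    cue.scene === target.scene &&
    (target.line === undefined || cue.line === target.line));
}

const audio_index = { MND: ['Living-MND'] };
const cues = [{ play: 'MND', scene: '1.1', line: 12 }];

const play_link = has_audio_for({ play: 'MND' }, audio_index, cues);
const scene_link = has_audio_for({ play: 'MND', scene: '1.1' }, audio_index, cues);
const cut_scene = has_audio_for({ play: 'MND', scene: '3.2' }, audio_index, cues);
const other_play = has_audio_for({ play: 'Ham' }, audio_index, cues);
```

With this made-up data, the play link and the link to scene 1.1 are eligible, while the trimmed-out scene and the play with no recording are not.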

It would only make sense to augment the link if there were cues indicating that audio is available for that specific location.

This is about links to an audio scope from outside of itself.

If you link to an audio scope from outside of that scope, then you’d need some other way to know that an audio program was available, if you wanted to be able to add audio to the links.

But why and how would you alter existing links, anyway? The link will take you to that place, where you’ll be in scope and then see the link in-place.

How often would you link from one scope to another? Rarely, it seems to me. And in many of those cases (e.g. documents, or curated points), you could do the necessary lookup in a build preprocess.

And would those augmented links actually start playback? I suppose the question is the same for the in-place links.

13.3 use for screen readers

Screen reader support could presumably be improved by using the relevant standards (namely ARIA).
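
One possible starting point, offered only as a sketch: derive a few ARIA attributes for the play/pause control from the player state. `aria-label` and `aria-busy` are standard ARIA attributes. The function names, the label wording and the mapping itself are assumptions, not something the player does today.

```javascript
// Hypothetical mapping from player state to ARIA attributes.
function aria_for_state(state) {
  return {
    // Describe what activating the control will do next.
    'aria-label': state === 'playing' ? 'Pause' : 'Listen',
    // Announce the in-between state while audio is loading.
    'aria-busy': state === 'waiting' ? 'true' : 'false'
  };
}

// Copy the attributes onto anything with a `setAttribute` method.
function apply_attributes(element, attributes) {
  Object.keys(attributes).forEach(
    name => element.setAttribute(name, attributes[name]));
}

const while_waiting = aria_for_state('waiting');
const while_playing = aria_for_state('playing');

// In the browser, this could be called whenever the state changes:
// apply_attributes(document.querySelector('.player-state-control'),
//                  aria_for_state('paused'));
```

Keeping the mapping separate from the DOM call means it can be checked without a browser, in the same spirit as building the human interface on top of a machine one.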

14 API

return {

get_player: get_media_player,

state: the_player_state,

await_catalog,

intent_to_listen,

get_cues_for,

cue_link_for

};

Footnotes:

“conduct”, Online Etymology Dictionary

“WebVTT - Web Video Text Tracks”, caniuse.com

§ “User Control of Downloads Over Cellular Networks”, “iOS-Specific Considerations”, Safari Developer Library. Copyright © 2012, Apple, Inc.

See also Aaron Gloege, “Overcoming iOS HTML5 audio limitations: Solutions and workarounds for mobile Safari”. IBM developerWorks®. October 2012.

§ “In Submissions”, “Hacker News Guidelines”, Y Combinator.